Mark59 Performance and Volume Testing Framework

Mark59 version 6.4 is now available. This release is compatible with JMeter 5.6.3. View the Downloads section for more details on the release.

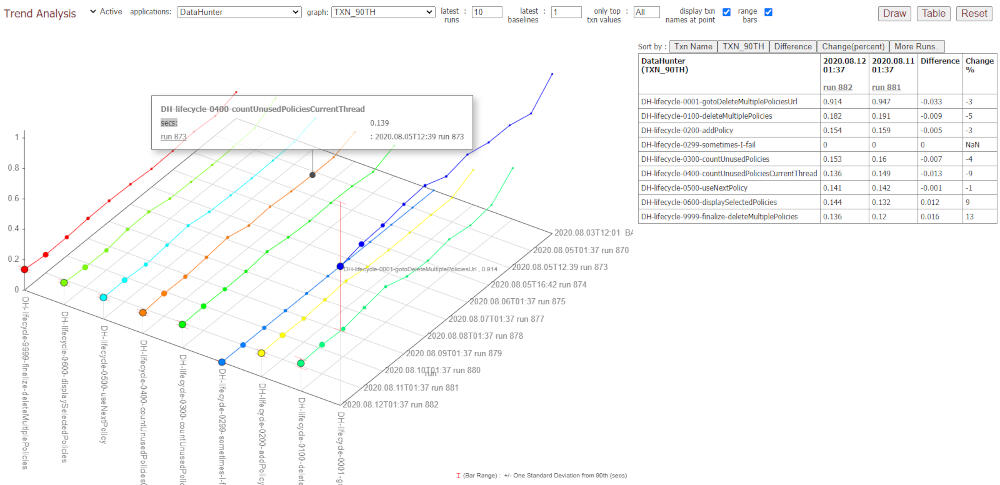

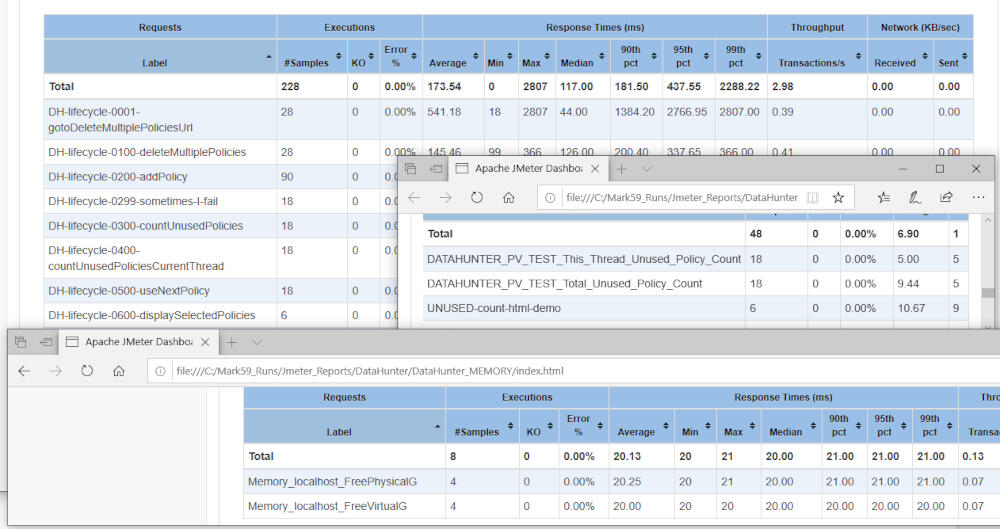

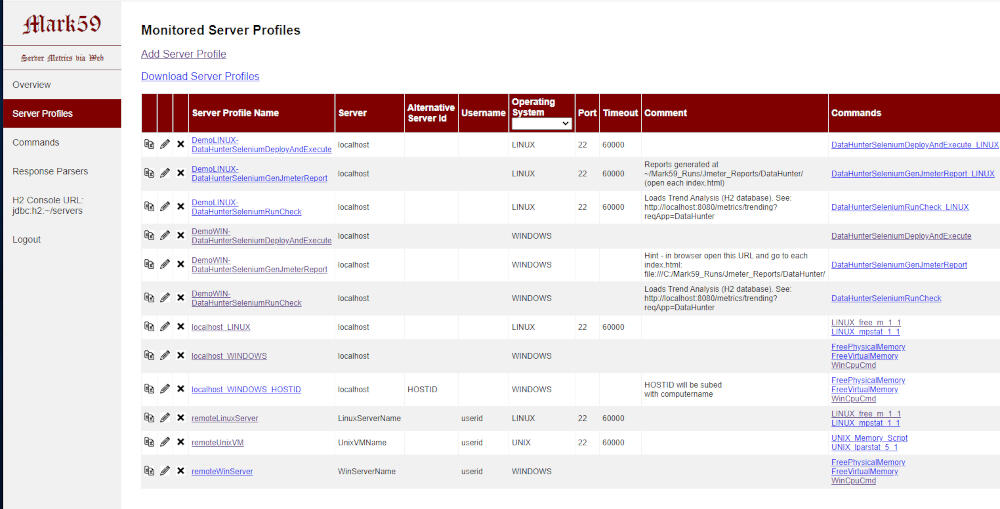

The Mark59 Performance and Volume Framework simplifies JMeter performance testing via automated test execution, reporting, SLA comparison and trend analysis.

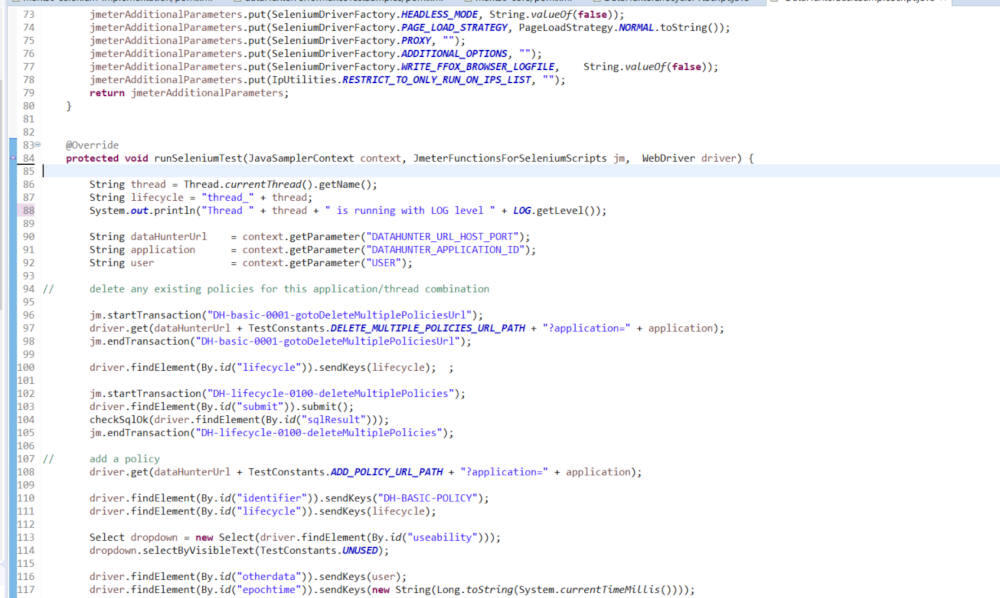

Java Selenium or Playwright can also be incorporated to accelerate script development.

Overview

Mark59 is an open-source, Java-based set of tools that aims to make performance testing of applications regular and repeatable. Its focus is the automated detection of SLA breaches in a test, and highlighting trends and issues in the application under test over time. It is designed so that this is achievable without necessarily needing specialist performance test skills.

We’ve avoided calling Mark59 a ‘framework’, as that word is often used in test automation to describe ‘clever’ software that hides or overlays core technologies, leaving the people who use it little chance or motivation to learn the skills they need in the industry. Rather, our integration of two popular test automation products, JMeter and Selenium (or Playwright), along with the work we have put into our examples and documentation, hopefully gives Performance Testers and Automation Testers an insight into scripting skills that are easily learnt and that benefit each other’s skill sets.

Mark59 was designed to run on Windows or Linux-based operating systems, and is also compatible with Macs.

Sample Screens:

Downloads

Select the appropriate zip version to download the executable jar files and samples. Both Linux and Windows are supported.

As of Mark59 v3+, all projects are contained in a single zip file.

If you are looking for the source code, see: https://github.com/mark-5-9/mark59

Details of release changes can be found at: https://github.com/mark-5-9/mark59?tab=readme-ov-file#releases

Current Release: Version 6.4 (August 2025)

Download: mark59-6.4.zip

(632.9 MB download via Google Drive link)

Release Summary:

UI Scripting: By default, exception stack traces have always been written to the console and the log4j log (as well as to a log file if the parameter ON_EXCEPTION_WRITE_STACK_TRACE is true). Console and log4j output can now be suppressed by setting the new additionalTestParameters ON_EXCEPTION_WRITE_STACK_TRACE_TO_CONSOLE and ON_EXCEPTION_WRITE_STACK_TRACE_TO_LOG4J_LOGGER to false.
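As a rough illustration of how these flags combine, the following Java sketch works out where a stack trace would be written for a given set of parameters. It is not Mark59 code: the class, enum and method names are hypothetical, the parameters are modelled as a plain Map<String, String>, the underscored parameter spellings are assumed, and treating a missing ON_EXCEPTION_WRITE_STACK_TRACE as false is an assumption of this sketch only.

```java
import java.util.EnumSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

public class StackTraceRouting {

    // Hypothetical names for the three places a stack trace can be written.
    enum Destination { CONSOLE, LOG4J_LOGGER, LOG_FILE }

    // Reads a boolean flag, falling back to the given default when the key is absent.
    static boolean flag(Map<String, String> params, String key, boolean defaultValue) {
        String value = params.get(key);
        return value == null ? defaultValue : Boolean.parseBoolean(value.trim());
    }

    // Works out where a stack trace would be written for the given parameters.
    static Set<Destination> destinations(Map<String, String> params) {
        Set<Destination> result = EnumSet.noneOf(Destination.class);
        // Console and log4j output happen by default unless explicitly switched off.
        if (flag(params, "ON_EXCEPTION_WRITE_STACK_TRACE_TO_CONSOLE", true)) {
            result.add(Destination.CONSOLE);
        }
        if (flag(params, "ON_EXCEPTION_WRITE_STACK_TRACE_TO_LOG4J_LOGGER", true)) {
            result.add(Destination.LOG4J_LOGGER);
        }
        // Assumption of this sketch: the log file is only written when the flag is explicitly "true".
        if (flag(params, "ON_EXCEPTION_WRITE_STACK_TRACE", false)) {
            result.add(Destination.LOG_FILE);
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("ON_EXCEPTION_WRITE_STACK_TRACE", "true");
        params.put("ON_EXCEPTION_WRITE_STACK_TRACE_TO_CONSOLE", "false");
        params.put("ON_EXCEPTION_WRITE_STACK_TRACE_TO_LOG4J_LOGGER", "false");
        System.out.println(destinations(params)); // prints [LOG_FILE]
    }
}
```

With no parameters set, the sketch keeps the long-standing default of writing to both the console and the log4j log.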

Trends Load : New parameter 'maxNumberofruns' (n) : Maximum number of runs to be stored for this application id, excluding baselines. The oldest non-baseline run(s) are removed from the database when this count is exceeded. Set to '-1' or '0' to deactivate. Defaults to 500.
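The retention rule can be sketched in Java as below. This is a hypothetical illustration of the behaviour described above, not the Trends Load implementation: the Run record, its sortable run-time strings and the runsToRemove method are assumptions, and the sketch treats any value of zero or less as deactivating the limit.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class RunRetention {

    // Hypothetical stored run for one application id: a sortable run time and a baseline flag.
    record Run(String runTime, boolean baseline) {}

    static final int DEFAULT_MAX_RUNS = 500;

    // Returns the runs that would be removed so that at most maxRuns non-baseline runs remain.
    static List<Run> runsToRemove(List<Run> runs, int maxRuns) {
        if (maxRuns <= 0) {
            return List.of(); // '-1' or '0' deactivates the limit
        }
        List<Run> nonBaseline = new ArrayList<>();
        for (Run run : runs) {
            if (!run.baseline()) { // baselines are never counted or removed
                nonBaseline.add(run);
            }
        }
        // Oldest first, so any excess at the front of the list is what gets removed.
        nonBaseline.sort(Comparator.comparing(Run::runTime));
        int excess = nonBaseline.size() - maxRuns;
        return excess > 0 ? List.copyOf(nonBaseline.subList(0, excess)) : List.of();
    }

    public static void main(String[] args) {
        List<Run> runs = List.of(
                new Run("202501010900", true),
                new Run("202501020900", false),
                new Run("202501030900", false),
                new Run("202501040900", false));
        System.out.println(runsToRemove(runs, 2)); // prints [Run[runTime=202501020900, baseline=false]]
    }
}
```

Sorting only the non-baseline runs keeps baselines out of both the count and the deletion candidates, matching the "excluding baselines" wording.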

DataHunter : (Bug) Removed an unnecessary SQL statement when processing 'Reusable Indexed' data

All Web Apps : Explicitly use GetMapping or PostMapping annotations instead of RequestMapping, plus many smaller code and JavaDoc tidy-ups

Dependencies Updated: spring-boot to 3.5.4, selenium to 4.34.0 (to chrome v138), playwright to 1.53.0

Summary of Changes with Potential Incompatibilities For this Release

For UI scripting, the BROWSER_EXECUTABLE argument is no longer in use. Please use OVERRIDE_PROPERTY_MARK59_BROWSER_EXECUTABLE instead.
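If existing scripts or test plans still pass the old argument, a small helper along these lines could rename it. This is a hypothetical sketch, not part of Mark59: it assumes the parameters are held as string name/value pairs and that the intended spellings are BROWSER_EXECUTABLE and OVERRIDE_PROPERTY_MARK59_BROWSER_EXECUTABLE, and the executable path is a placeholder.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class BrowserExecutableMigration {

    static final String OLD_KEY = "BROWSER_EXECUTABLE";
    static final String NEW_KEY = "OVERRIDE_PROPERTY_MARK59_BROWSER_EXECUTABLE";

    // Returns a copy of the parameters with the retired key renamed to its replacement.
    // A value already set under the new key wins over a leftover old one.
    static Map<String, String> migrate(Map<String, String> params) {
        Map<String, String> migrated = new LinkedHashMap<>(params);
        String legacyValue = migrated.remove(OLD_KEY);
        if (legacyValue != null) {
            migrated.putIfAbsent(NEW_KEY, legacyValue);
        }
        return migrated;
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put(OLD_KEY, "/opt/browser/chrome"); // placeholder path
        System.out.println(migrate(params)); // prints {OVERRIDE_PROPERTY_MARK59_BROWSER_EXECUTABLE=/opt/browser/chrome}
    }
}
```

Working on a copy leaves the caller's original parameters untouched, which makes the change easy to compare before and after.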

Previous Release: Version 6.3 (March 2025)

Download: mark59-6.3.zip

(630.5 MB download via Google Drive link)

Release Summary:

DataHunter : New 'Reusable Indexed' datatype introduced

DataHunter : Extra 'Identifier Like' filter for Multiple Item Selection

Dependencies Updated: spring-boot to 3.4.3, selenium to 4.29.0 (to chrome v133), playwright to 1.50.0, jackson xmlapis to 2.16.1, okhttp to 4.12.0, org.json to 20250107, commons-beanutils to 1.10.0

View https://github.com/mark-5-9/mark59 for additional details and older releases.

Documentation

Our Mark59 User Guide documentation can be found below:

Mark59 User Guide for Version 6 PDF (6.9 MB download via Google Drive link)

Mark59 User Guide for Version 6 GDOC (6.4 MB Google Doc)

Get a feel for how it works in a few minutes by working through the 'Quick Start' chapters.

About

The Background to Mark59

Mark59 started from some ideas conceived around 2014 and has since developed into our latest release. It was developed by a team of working Performance and Volume testers at the Australian insurance company IAG in Melbourne. More out of the necessity of maintaining multiple and varied applications than by design, our team over time changed its practices from a traditional way of testing to something very similar to what is now called DevOps, and along the way created the set of tools that has become Mark59.

A core team has worked on the project for most of its life, but many, many ideas came from the excellent Performance Testers who have been part of the team over the years, not to mention the (sometimes rather blunt but valuable) feedback we have received from our client projects and others. We hope we haven't missed too many people from the acknowledgements, but great ideas and suggestions have come to us from so many that we fear we have.

The People

The Core Team:

Philip Webb

Dhivya Raghavan

Greg Johnstone

Major Contributors:

Michael Cohen

John Gallagher

Sanman Basavegowda

David Nguyen

Edmond Lew

Grateful Acknowledgements:

Khushal Rawal

Stephen Townshend

Srivalli Krishnardhula

Pankaj Harde

Mallamma Ganigi

Gaurav Shukla

Nikolai Chetverikov

The name 'Mark59'

The biblical story of the 'Exorcism of the Gerasene demoniac' appears in the New Testament in all of the synoptic gospels (Matthew, Mark and Luke), but the best-known account is from Mark's gospel. At a critical point in the story, Jesus challenges the demon in a possessed man to name itself, and discovers he is not facing one demon but many when the famous reply comes: "My name is Legion, for we are many" (Mark 5:9).

We couldn't help relating our (admittedly trivial) struggles with turning a single Selenium script into many to this wonderful story, and so 'mark59.com'.

Contact

You can contact us to give suggestions or feedback via the form below.

Note that we are a small working team, but we will do our best to respond.